Don’t Want None

One of the many current downsides of @table variables is that modifying them inhibits parallelism, which is a problem #temp tables don’t have.

While updating and deleting from @table variables is fairly rare (I’ve seen it, but not too often), you at minimum need an insert to put some data in there.

No matter how big, bad, ugly, or costly your insert statement is, SQL Server can’t parallelize it.

Dimmo

Here’s our select statement.

SELECT DISTINCT

u.Id

FROM dbo.Users AS u

JOIN dbo.Posts AS p

ON p.OwnerUserId = u.Id

JOIN dbo.Comments AS c

ON c.PostId = p.Id

AND c.UserId = u.Id

WHERE c.Score >= 5;

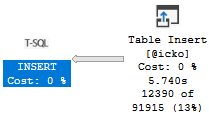

This goes parallel and runs for about 3 seconds.

But if we try to insert that into a @table variable, the plan will no longer go parallel, and will run for ~6 seconds.

DECLARE @icko TABLE (id INT);

INSERT @icko ( id )

SELECT DISTINCT

u.Id

FROM dbo.Users AS u

JOIN dbo.Posts AS p

ON p.OwnerUserId = u.Id

JOIN dbo.Comments AS c

ON c.PostId = p.Id

AND c.UserId = u.Id

WHERE c.Score >= 5;

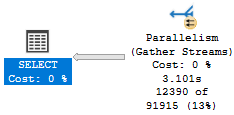

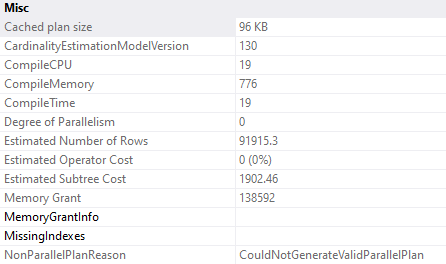

If we hit F4 to get the properties of the INSERT, well…

Let’s Say For Some Crazy Reason

You need to keep using a table variable.

Let’s say, I dunno, the crappy 1 row estimate gets you a better plan.

Or like… I dunno. Temp tables recompile too much.

I’m reaching, I know. But hey, that’s what consultants do. Have you read any blog posts lately?

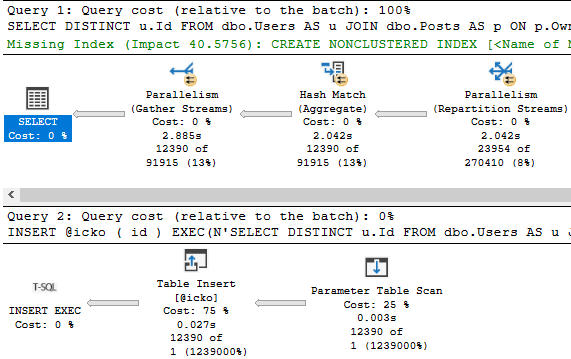

If we change our insert to this, we get parallelism back:

DECLARE @icko TABLE (id INT);

INSERT @icko ( id )

EXEC(N'SELECT DISTINCT

u.Id

FROM dbo.Users AS u

JOIN dbo.Posts AS p

ON p.OwnerUserId = u.Id

JOIN dbo.Comments AS c

ON c.PostId = p.Id

AND c.UserId = u.Id

WHERE c.Score >= 5;')

The dynamic SQL executes in a separate context, and the insert happens in… idk some magickal place.

But THAT’S PRETTY COOL, HUH?

This would also work if we put the query inside a stored procedure, or stuck the statement inside a variable and used sp_executesql.

In either case, the INSERT…EXEC pattern is your friend here.

Of course, you could just use a #temp table.

Sigh.

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.

Good tip , thank you for sharing your knowledge!