Dirty Work

There are three classes of administrator when it comes to parallelism settings:

- Never changed the defaults

- Changed the defaults to something wacky

- Changed the defaults to something safe

The beginning of this post is for the first two genera of administrator.

Even though Microsoft added a MAXDOP calculation to the installer starting with SQL Server 2019, I’ve seen cases where the recommended setting was wrong because the hardware changed after the initial configuration.

For example: your VM admin created the smallest possible machine to get things set up for you, added CPU and memory later on, and you ended up with MAXDOP set to 1.
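If you suspect CPUs were added after setup, a quick sanity check is to compare what SQL Server can see now against the MAXDOP that’s configured. This is a sketch, assuming SQL Server 2016 or later; grouping by NUMA node (`parent_node_id`) is just one convenient way to slice it.

```sql
/* How many schedulers can SQL Server actually use right now, per NUMA node? */
SELECT
    s.parent_node_id,
    online_schedulers = COUNT_BIG(*)
FROM sys.dm_os_schedulers AS s
WHERE s.status = N'VISIBLE ONLINE'
GROUP BY
    s.parent_node_id
ORDER BY
    s.parent_node_id;

/* What MAXDOP is configured, and in use, today? */
SELECT
    c.name,
    c.value,
    c.value_in_use
FROM sys.configurations AS c
WHERE c.name = N'max degree of parallelism';
```

If the scheduler counts went up but MAXDOP is still 1, that’s a decent hint the setting was picked for hardware you no longer have.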

The installer still doesn’t let you change Cost Threshold For Parallelism at setup, which is plum bizarre. I get why making a recommendation here is hard (and I’ll talk about that later on), but it should at least give folks the option.

I want to add this here, before we get too far along: the point of adjusting these values is not “because there’s too much parallelism” or “because there are too many CX_ waits”. The goal is to increase concurrency by only allowing queries that benefit from parallelism to use it. Parallel queries can reserve many CPU threads (DOP * parallel branches), and if you throw enough of them at a server, you can run out of worker threads and start hitting THREADPOOL waits.
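To see whether you’re anywhere near that cliff, here’s a minimal sketch that compares the worker thread ceiling with what’s currently in use, and checks whether THREADPOOL waits have piled up since the last restart (or since wait stats were last cleared).

```sql
/* The most worker threads this instance will create */
SELECT
    osi.max_workers_count
FROM sys.dm_os_sys_info AS osi;

/* Workers currently created and active, summed across user schedulers */
SELECT
    current_workers = SUM(s.current_workers_count),
    active_workers = SUM(s.active_workers_count),
    queued_tasks = SUM(s.work_queue_count)
FROM sys.dm_os_schedulers AS s
WHERE s.status = N'VISIBLE ONLINE';

/* Cumulative THREADPOOL waits */
SELECT
    ws.wait_type,
    ws.waiting_tasks_count,
    ws.wait_time_ms
FROM sys.dm_os_wait_stats AS ws
WHERE ws.wait_type = N'THREADPOOL';
```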

Some folks do Good Enough© with the stock advice of a max degree of parallelism of 4 to 8, and cost threshold for parallelism of 50. Others have a harder time finding a sweet spot that properly balances performance and concurrency.

But you need to start somewhere.

If you truly have no idea how to change these settings, review these example commands, but don’t just blindly run them.

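```sql
/* Cost threshold for parallelism is an advanced option, so expose those first */
EXEC sys.sp_configure
    @configname = 'show advanced options',
    @configvalue = 1;
RECONFIGURE;

/* Only queries whose serial plan costs more than this are considered for parallelism */
EXEC sys.sp_configure
    @configname = 'cost threshold for parallelism',
    @configvalue = 50;
RECONFIGURE;

/* Upper limit on the number of cores a parallel query can use */
EXEC sys.sp_configure
    @configname = 'max degree of parallelism',
    @configvalue = 8;
RECONFIGURE;
```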

Read the rest of the post first.

Evaluating

The easy way to see your settings, and whether they’re relatively sane, is like so:

```sql
SELECT
    c.name,
    c.value,
    c.value_in_use,
    c.description,
    socket_count =
        CASE c.name
             WHEN N'max degree of parallelism'
             THEN osi.socket_count
             ELSE NULL
        END,
    cores_per_socket =
        CASE c.name
             WHEN N'max degree of parallelism'
             THEN osi.cores_per_socket
             ELSE NULL
        END
FROM sys.configurations AS c
CROSS JOIN
(
    SELECT
        osi.socket_count,
        osi.cores_per_socket
    FROM sys.dm_os_sys_info AS osi
) AS osi
WHERE c.name IN
      (
          N'cost threshold for parallelism',
          N'max degree of parallelism'
      );
```

Whenever I query sys.configurations, I get both the value and the value in use, because sometimes folks forget to run RECONFIGURE after they change something.
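A quick way to catch that across every option, not just the two covered here, is to look for rows where the two columns disagree. A sketch: both columns are `sql_variant`, and comparing them directly is fine for this purpose. A mismatch on an option where `is_dynamic = 0` may mean a restart is pending rather than a forgotten RECONFIGURE.

```sql
/* Options changed with sp_configure that aren't in effect yet:
   either RECONFIGURE wasn't run, or a non-dynamic option still needs a restart */
SELECT
    c.name,
    c.value,
    c.value_in_use,
    c.is_dynamic
FROM sys.configurations AS c
WHERE c.value <> c.value_in_use;
```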

But back to the point! Whatcha got here?

- Is Cost Threshold For Parallelism a number higher than 5 and lower than 200?

- Is Max Degree Of Parallelism an even number between 4 and 8?

If so, you probably don’t need to make any changes here, unless something else is going on.
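If you’d rather have SQL Server do the squinting, here’s a rough sketch that turns the two rules of thumb above into a flag. The ranges are just the ones from the checklist, not anything official, and `looks_sane = 1` only means the setting falls inside them.

```sql
SELECT
    c.name,
    value_in_use = CONVERT(integer, c.value_in_use),
    looks_sane =
        CASE
            /* Higher than 5 and lower than 200 */
            WHEN c.name = N'cost threshold for parallelism'
            AND  CONVERT(integer, c.value_in_use) > 5
            AND  CONVERT(integer, c.value_in_use) < 200
            THEN 1
            /* An even number between 4 and 8 */
            WHEN c.name = N'max degree of parallelism'
            AND  CONVERT(integer, c.value_in_use) IN (4, 6, 8)
            THEN 1
            ELSE 0
        END
FROM sys.configurations AS c
WHERE c.name IN
      (
          N'cost threshold for parallelism',
          N'max degree of parallelism'
      );
```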

And hey, if you’ve got one of those really awful vendor products that tells you to set MAXDOP to 1, these days you should be using a database scoped configuration for that.
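A minimal sketch, assuming SQL Server 2016 or later and a vendor database named `VendorDB` (a hypothetical name; use your own). This keeps the vendor’s MAXDOP 1 requirement inside their database instead of forcing it on the whole server.

```sql
USE VendorDB; /* hypothetical database name */

/* Applies to queries running in the context of this database only */
ALTER DATABASE SCOPED CONFIGURATION
    SET MAXDOP = 1;

/* Confirm it took */
SELECT
    dsc.name,
    dsc.value
FROM sys.database_scoped_configurations AS dsc
WHERE dsc.name = N'MAXDOP';
```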

For most folks, this will get you to an okay place. You may have some other considerations based on the number of cores and/or sockets, and that can get pretty confusing.

Changing these settings will clear out the plan cache, so be a little careful when you do it, but you don’t need to restart or anything.

Thinking Harder About Cost Threshold For Parallelism

Many folks who end up here are already pretty sophisticated when it comes to SQL Server, and I’d hate to leave them hanging.

Let’s talk more about each setting, and how they interact with your server and queries.

First up, alphabetically, is cost threshold for parallelism. Right now, you can only set it at the server level. You can technically remove it from the equation by:

- Setting MAXDOP to 1

- Using undocumented trace flag 8649, or…

- Using undocumented query hint ENABLE_PARALLEL_PLAN_PREFERENCE to force a parallel plan
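For testing only, here’s roughly what the two undocumented options look like on a single query. `dbo.Posts` is a stand-in table; `QUERYTRACEON` generally requires sysadmin, and the `USE HINT` form needs a build that recognizes the hint, so treat both as lab tools rather than production fixes.

```sql
/* Undocumented trace flag 8649, scoped to one query (generally needs sysadmin) */
SELECT
    c = COUNT_BIG(*)
FROM dbo.Posts AS p /* hypothetical table */
WHERE p.Score > 100
OPTION (QUERYTRACEON 8649);

/* Undocumented USE HINT that prefers a parallel plan */
SELECT
    c = COUNT_BIG(*)
FROM dbo.Posts AS p
WHERE p.Score > 100
OPTION (USE HINT ('ENABLE_PARALLEL_PLAN_PREFERENCE'));
```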

It would be neat if you could set it at the database level, like MAXDOP, but whatever.

One thing that comes up consistently when talking about this setting is that folks often see parallel queries with costs lower than the cost threshold for parallelism. All that means is that the serial plan’s cost broke the threshold, and the parallel plan was chosen because it was cheaper. Cheaper can mean lower than the cost threshold for parallelism.

When a query is compiled, assuming there isn’t some parallelism-inhibiting factor in place (scalar UDFs, table variable inserts, etc.), its serial plan has to “cost” more than this threshold for a parallel plan to be considered. If it does, and the optimizer can come up with a parallel plan that’s cheaper than the serial plan, then you can pretty well count on the parallel plan being chosen.
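To make the cost logic concrete, here’s a toy model with made-up numbers. It isn’t how the optimizer is implemented, just the arithmetic from the two paragraphs above: the serial plan’s estimated cost has to clear the threshold, and then the cheaper plan wins, even if its cost lands below the threshold.

```sql
DECLARE
    @cost_threshold float = 50, /* cost threshold for parallelism */
    @serial_cost float = 60,    /* made-up estimated cost of the serial plan */
    @parallel_cost float = 35;  /* made-up estimated cost of the parallel plan */

SELECT
    /* The serial plan must cost more than the threshold */
    parallel_considered =
        CASE
            WHEN @serial_cost > @cost_threshold
            THEN 1
            ELSE 0
        END,
    /* ...and then the parallel plan has to be cheaper than the serial one */
    parallel_chosen =
        CASE
            WHEN @serial_cost > @cost_threshold
            AND  @parallel_cost < @serial_cost
            THEN 1
            ELSE 0
        END,
    /* The plan you end up seeing can cost less than the threshold */
    chosen_cost_below_threshold =
        CASE
            WHEN @parallel_cost < @cost_threshold
            THEN 1
            ELSE 0
        END;
```

With these numbers, all three columns come back as 1, which is exactly the “parallel plan cheaper than cost threshold” situation folks ask about.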

This is where things get tricky! Right off the bat!

See, query costs are all estimates, and there’s no actual counterpart to them in your query plans. If you’re anything like me, you’ve probably seen some low “cost” queries run for a really long time.

Several things make this setting difficult to pin down:

- Incorrect assumptions about “too many” CX waits (this goes for MAXDOP too)

- Untuned workloads having consistently high cost queries

- Wasting time looking at the plan cache for average costs

- Changing the setting not having an appreciable effect

- Misunderstanding what costs actually mean

What does it all mean for you? Well, increasing the setting from the default (5) to a meaningfully higher number will usually reduce the total number of queries that are eligible for a parallel plan.

This doesn’t mean performance is doomed for those queries. Heck, they may not have needed a parallel plan to begin with. The optimizer is pretty good at costing queries generally, but it’s not perfect, and that’s where generalized workload settings need to be overridden or changed.

Having fewer queries eligible to gobble up a whole bunch of CPU threads is one way of increasing overall concurrency. Individual query performance may change for better or worse, and you may need to raise or lower the number you picked here, but that’s part of the process.

Thinking Harder About Max Degree Of Parallelism

The second option you have to address concurrency and performance issues stemming from parallelism is setting an upper limit on the number of cores a parallel query is allowed to engage, which is also the number of threads it can acquire per parallel branch.

In a way, this setting is a little easier to settle on than Cost Threshold for Parallelism, but there are some things you should be aware of:

- The parallel version of a plan isn’t just the serial version with parallelism; you can end up with a very different set of operators

- The optimizer may choose a serial plan if DOP is set too low for a parallel plan to make sense

- Whatever you set DOP to, parallel plans will use at least one extra coordinator thread to manage DOP threads

- Leaving this set to zero is only really dangerous on servers with > 8 cores

- Setting this to an odd number is generally not a good global setting, though it can be useful in edge cases for a single query
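As a rough worked example: a plan running at DOP 8 with three parallel branches can reserve 8 * 3 = 24 worker threads, plus the coordinator, so 25 in total. To see what running queries actually got, SQL Server 2016 and later expose `dop` and `parallel_worker_count` in `sys.dm_exec_requests`; a sketch:

```sql
/* Parallel requests running right now, and how many parallel workers they reserved */
SELECT
    r.session_id,
    r.status,
    r.dop,
    r.parallel_worker_count,
    r.wait_type
FROM sys.dm_exec_requests AS r
WHERE r.dop > 1
ORDER BY
    r.parallel_worker_count DESC;
```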

There have been times when I’ve suggested MAXDOP of 4 on servers with a single 8-core processor, but that was mostly a temporary fix until some more meaningful tuning work could be done.

It’s okay to use these settings as Band-Aids, just don’t let them be habit-forming.

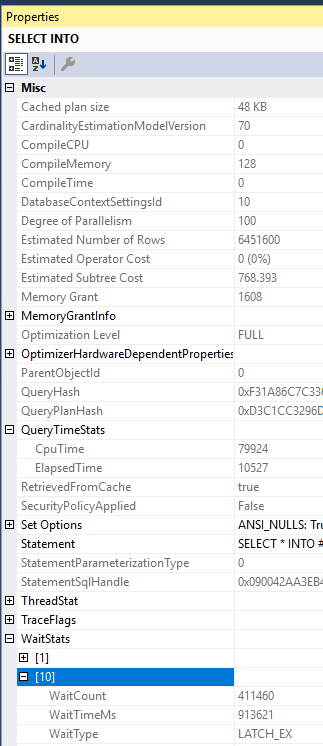

How can you tell if a parallel query plan is effective, and how can you decide if you need higher or lower DOP? Great question(s)! Look at you. I’m so proud.

- If your parallel and serial plans have roughly equivalent CPU and duration, the parallel plan isn’t better

- If your parallel plan isn’t anywhere near DOP times faster than your serial query, the parallel plan probably isn’t better

- If your parallel plan hits exchange spills or deadlocks, buckle up, you’re in for a fun day (of MAXDOP 1 hints)

- If your parallel plan gets slower as you touch more data, you might want to try hinting a higher DOP
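One low-tech way to run those comparisons is to hint the same query at different DOPs with `SET STATISTICS TIME ON`, then compare CPU time against elapsed time in the Messages tab. `dbo.Posts` and the DOP values are placeholders. A `MAXDOP` query hint can go higher than the server-level setting (Resource Governor limits still apply), though a hint alone doesn’t guarantee you’ll get a parallel plan.

```sql
SET STATISTICS TIME ON;

/* Serial baseline */
SELECT
    c = COUNT_BIG(*)
FROM dbo.Posts AS p /* hypothetical table */
WHERE p.Score > 100
OPTION (MAXDOP 1);

/* Same query at the DOP you're evaluating */
SELECT
    c = COUNT_BIG(*)
FROM dbo.Posts AS p
WHERE p.Score > 100
OPTION (MAXDOP 8);

/* A higher DOP, for queries that slow down as they touch more data */
SELECT
    c = COUNT_BIG(*)
FROM dbo.Posts AS p
WHERE p.Score > 100
OPTION (MAXDOP 16);

SET STATISTICS TIME OFF;
```

If the DOP 8 run’s elapsed time isn’t somewhere in the neighborhood of eight times faster than the serial run, the parallel plan probably isn’t buying you much.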

I know, it sounds kooky, but this is all stuff I have to think about and mess with when I’m tuning queries for clients. Many times, it seems like an attractive concept to force a parallel plan on a slow query, but the end result isn’t necessarily better.

It’s sort of like when I expect a query to use a nested loops join, but it doesn’t. If you hint the query to do the loop join, you might get a missing index request that explains why the optimizer thought a different join type was cheaper. Or you might just find out the optimizer was wrong. Again.

Getting back to the main point, though: parallelism is a very effective equalizer for the right kind of queries. It just doesn’t necessarily help every query.

Slug Away

To recap a little bit here: most SQL Server users out there will do fine following basic advice about cost threshold for parallelism and max degree of parallelism.

There will be outliers, edge cases, and times when you need to work outside of those settings for particular queries to run optimally. Heck, if a client tells me they’re running a data warehouse, the last thing I’m going to get hung up on is cost threshold for parallelism.

If you change those settings away from the defaults, and you’re still for some reason dissatisfied with the amount of CX_ waits on your server, try focusing on the queries that are going parallel and tuning them to reduce their estimated costs to lower numbers.
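A starting point for finding those queries, assuming SQL Server 2016 or later (where `sys.dm_exec_query_stats` has the `*_dop` columns), is to pull cached statements that have gone parallel, heaviest CPU first. The plan cache only holds what’s still cached, so treat this as a sample rather than a census.

```sql
/* Cached statements that have run with DOP > 1, heaviest total CPU first */
SELECT TOP (10)
    qs.max_dop,
    qs.last_dop,
    qs.execution_count,
    total_cpu_ms = qs.total_worker_time / 1000,      /* worker time is in microseconds */
    total_elapsed_ms = qs.total_elapsed_time / 1000,
    statement_text =
        SUBSTRING
        (
            st.text,
            (qs.statement_start_offset / 2) + 1,
            (
                CASE qs.statement_end_offset
                     WHEN -1
                     THEN DATALENGTH(st.text)
                     ELSE qs.statement_end_offset
                END - qs.statement_start_offset
            ) / 2 + 1
        )
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
WHERE qs.max_dop > 1
ORDER BY
    qs.total_worker_time DESC;
```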

Sometimes those waits really are your fault.

Thanks for reading!
