Keep It A Buck

Here are the missing indexes that SQL Server wants for our aggregation queries from yesterday:

    CREATE INDEX
        p2
    ON dbo.Posts
        (OwnerUserId, Score)
    WITH
        (MAXDOP = 8, SORT_IN_TEMPDB = ON, DATA_COMPRESSION = PAGE);

    CREATE INDEX
        c2
    ON dbo.Comments
        (UserId, Score)
    WITH
        (MAXDOP = 8, SORT_IN_TEMPDB = ON, DATA_COMPRESSION = PAGE);

I’ve taken a small bit of artistic license with them.

The crappy thing is… they really do not help, and in some cases things get substantially worse.

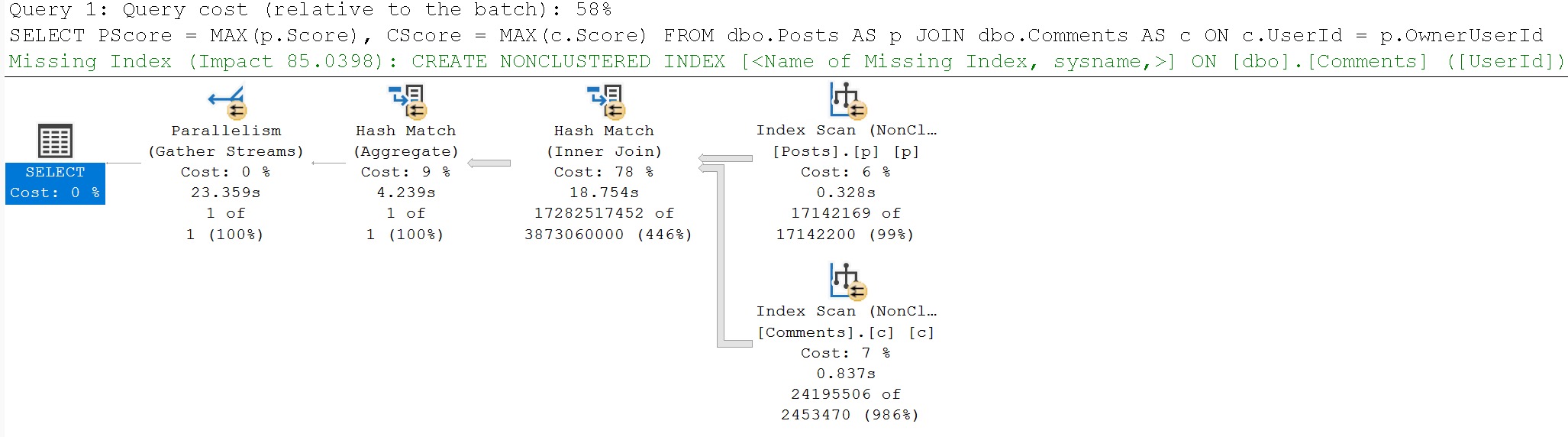

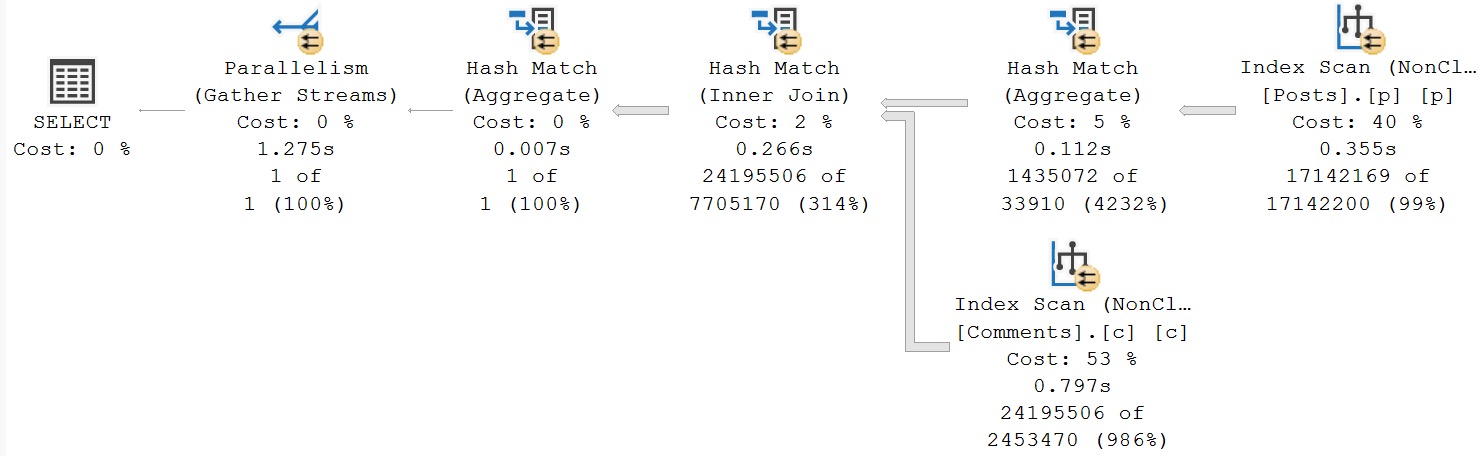

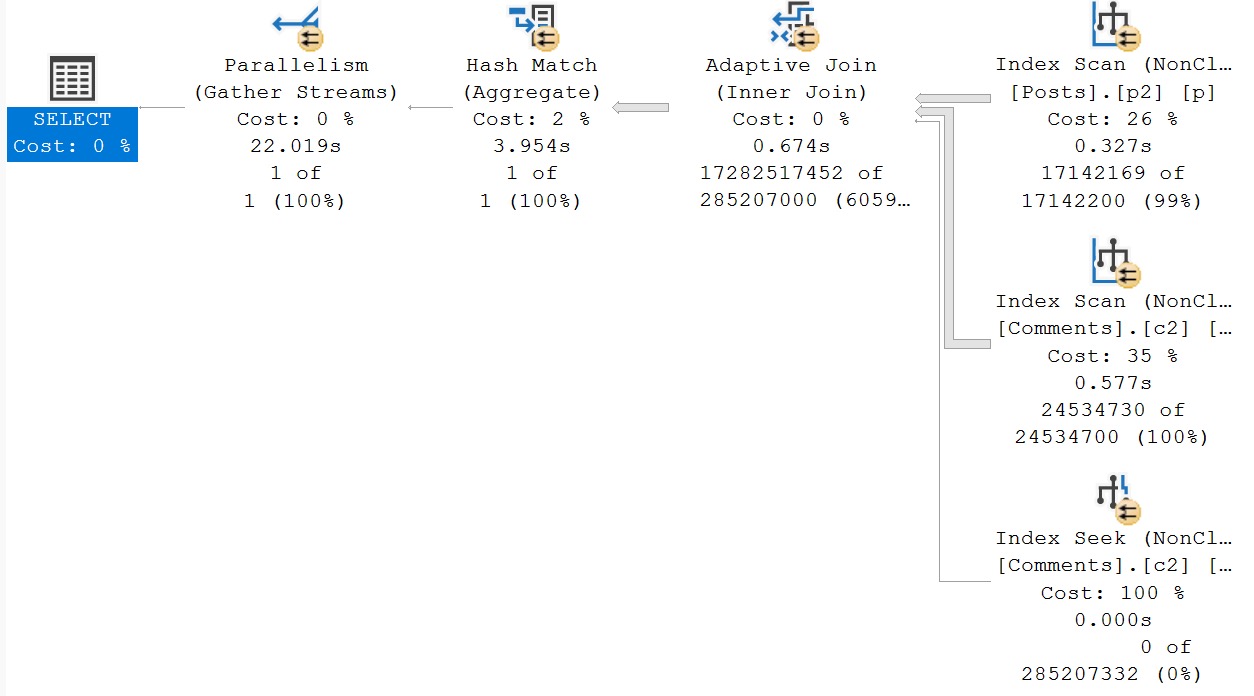

Original Query

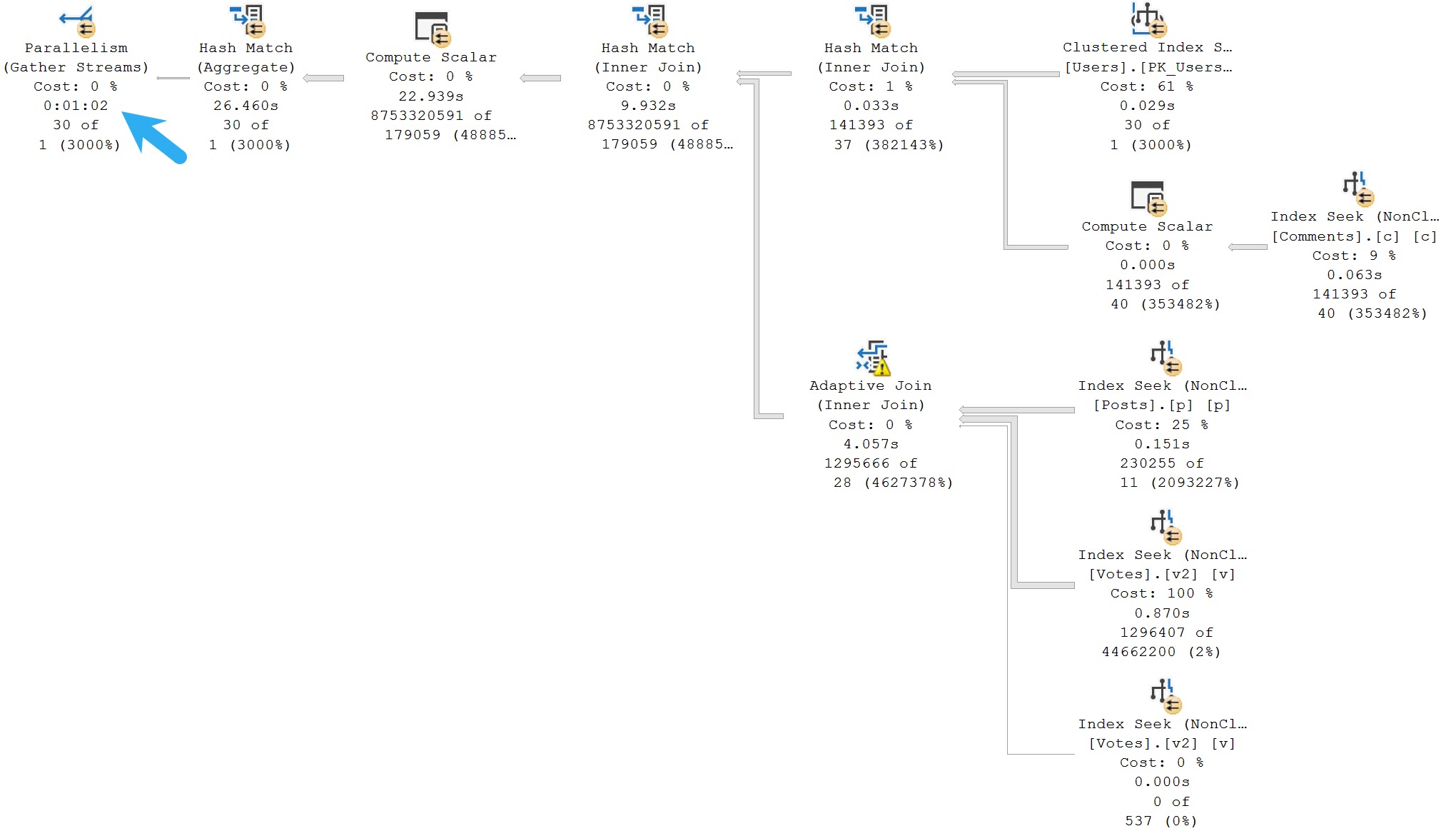

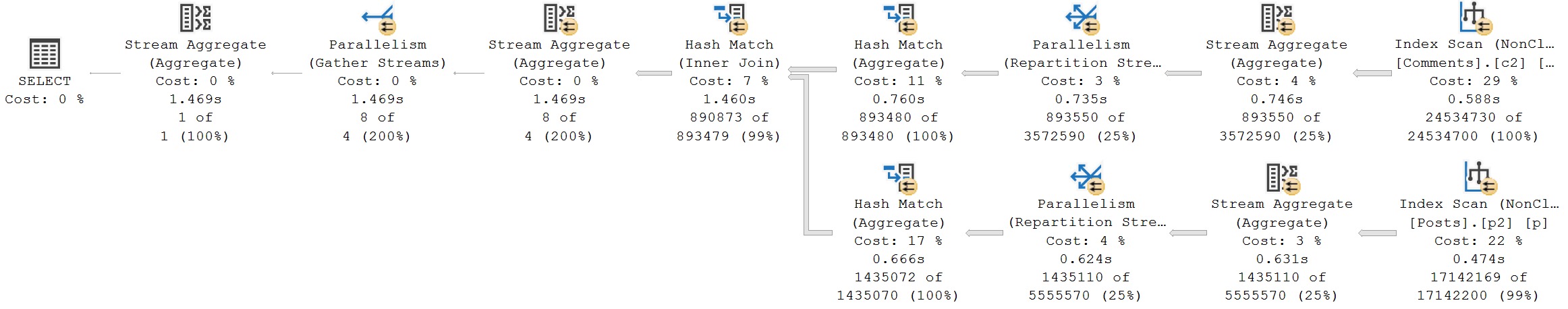

The original query plan is still awful. It is using both of our new indexes.

No early aggregation whatsoever. Yesterday’s plan took 23 seconds and today’s takes 22, so I’d hardly call us indexing victors for the improvement.

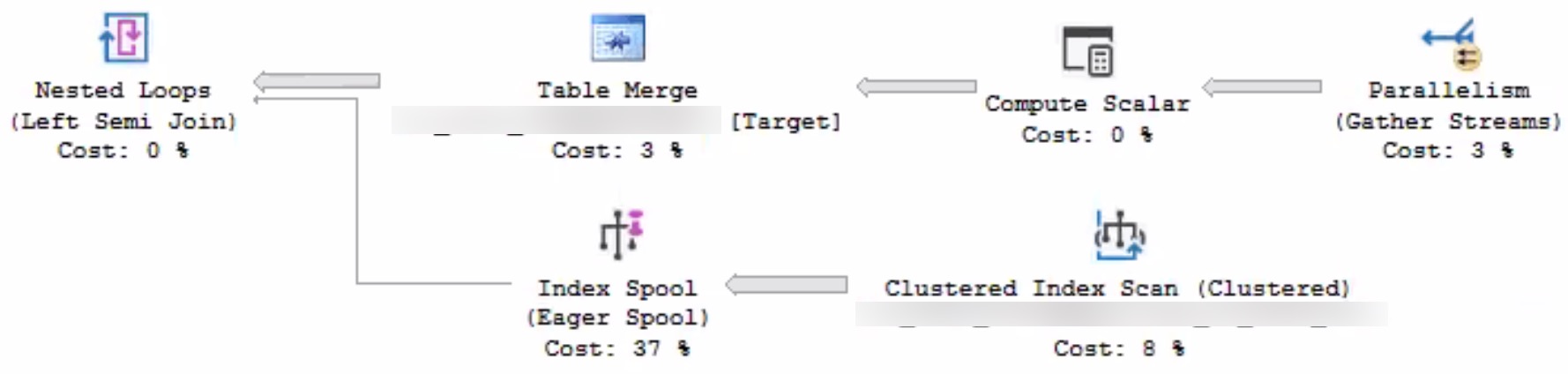

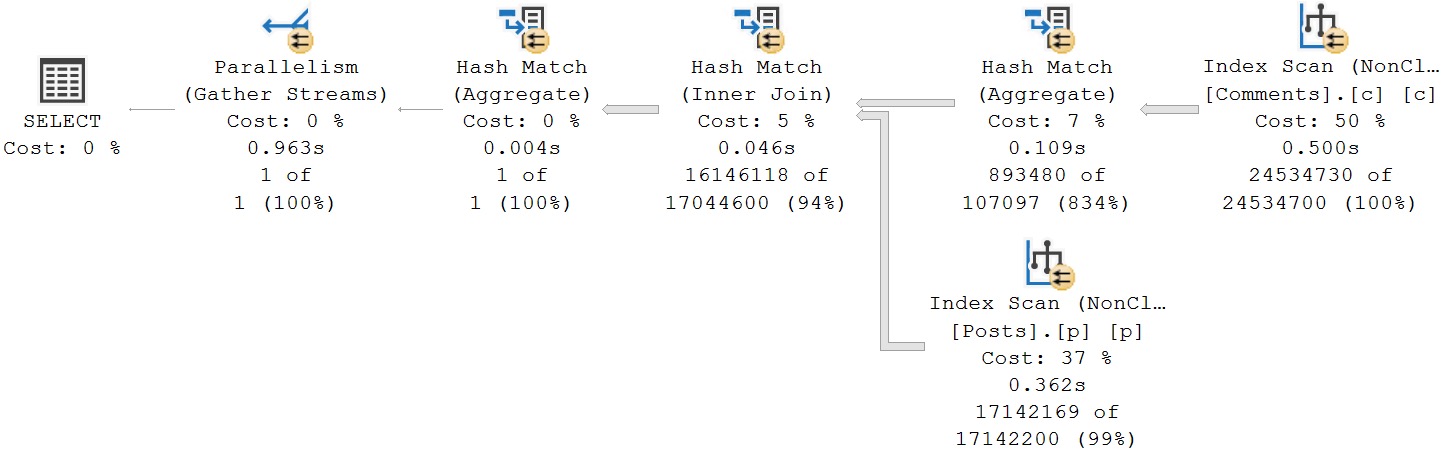

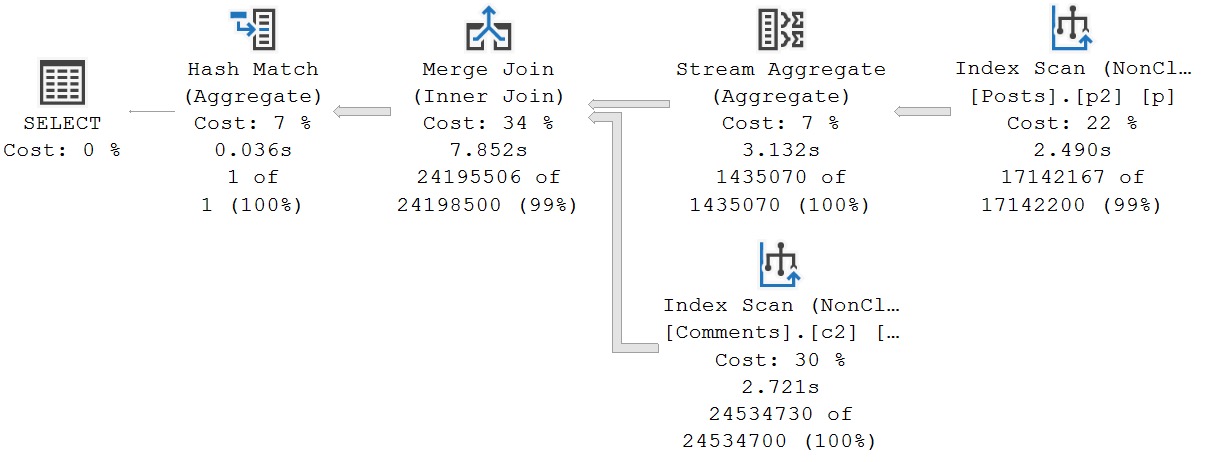

Rewrite #1: Manually Aggregate Posts

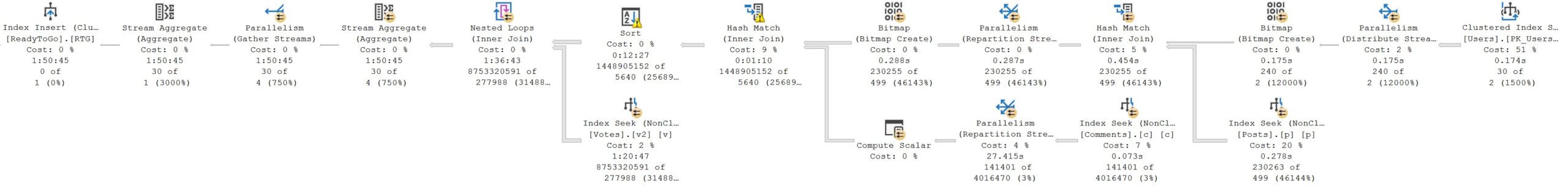

This one eats it the hardest, again, using both of our new indexes.

If one were to appreciate any aspect of this query plan, it’s that the optimizer didn’t choose a parallel merge join plan. Parallel merge joins were a mistake, and have driven me closer to alcohol-induced comas than the Red Sox in the 90s.

The total runtime for this query shoots up to about 8 seconds. The biggest change, aside from a serial execution plan being chosen, is that only the Hash Match operator at the very end runs in Batch Mode. All other operators execute in Row Mode.

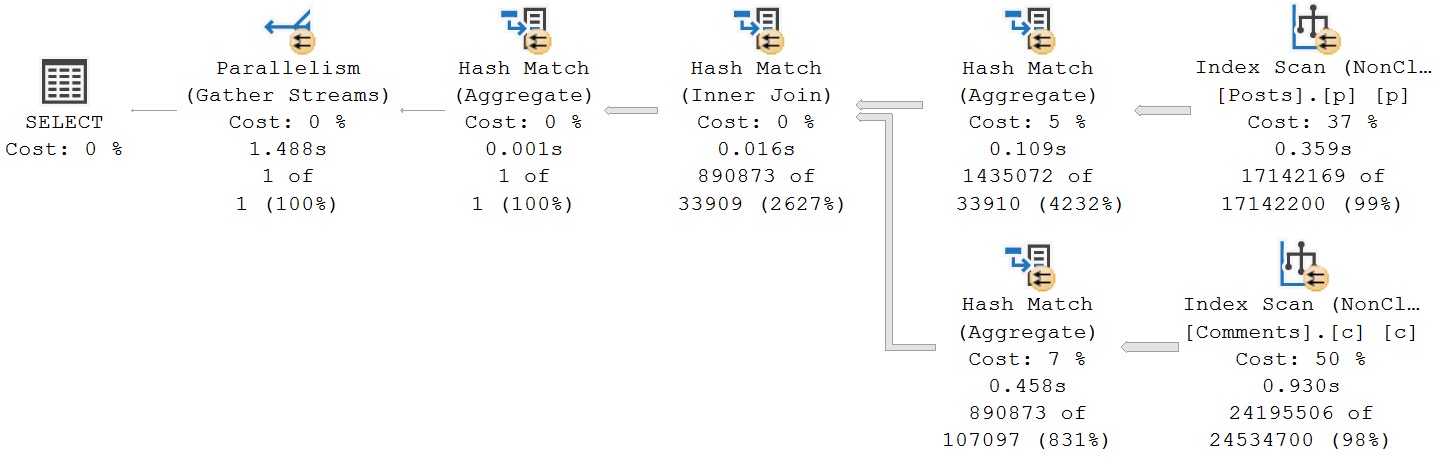

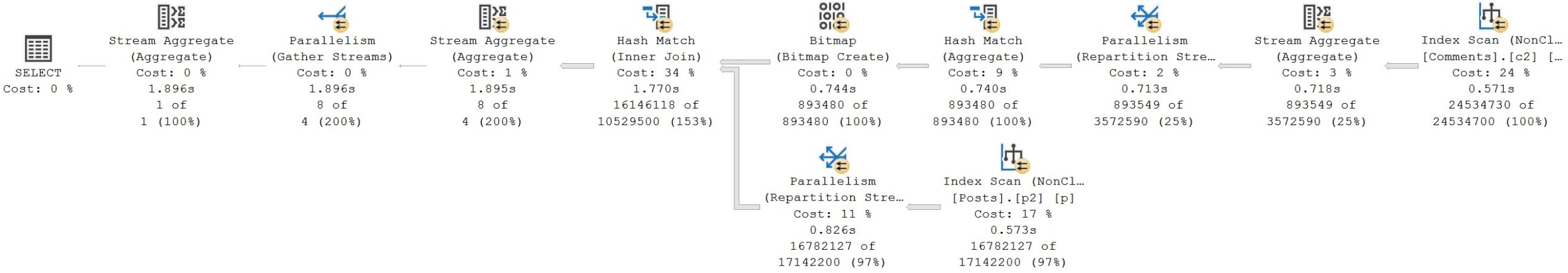

Rewrite #2: Manually Aggregate Comments

We go back to a parallel plan, but again, in Row Mode. This query now takes 2x as long as yesterday’s Batch Mode plan.

Again, both new indexes are in use here. This one is the most disappointing.

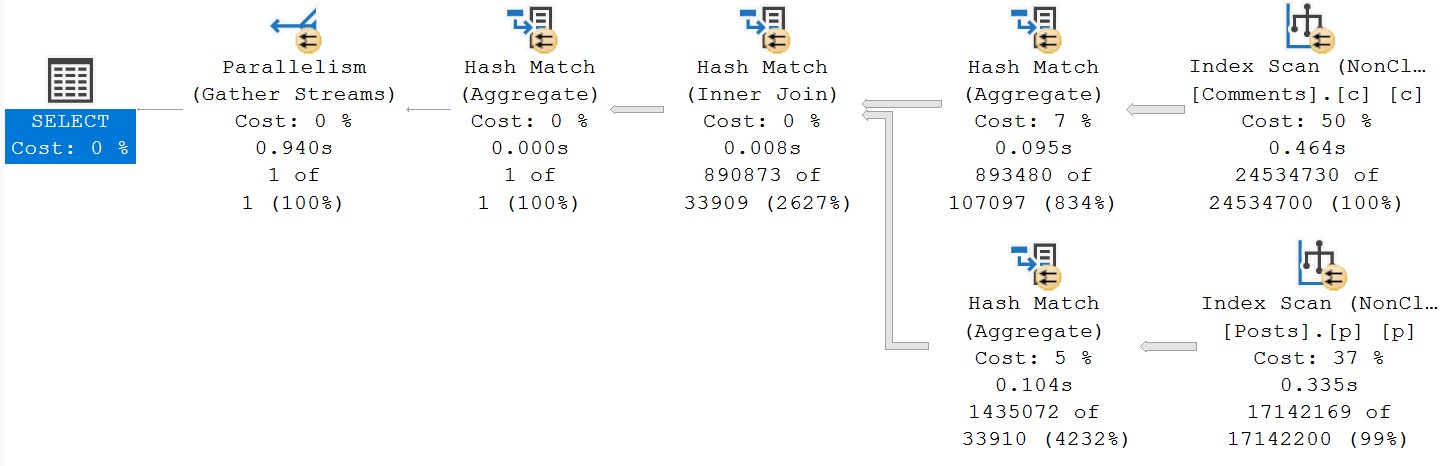

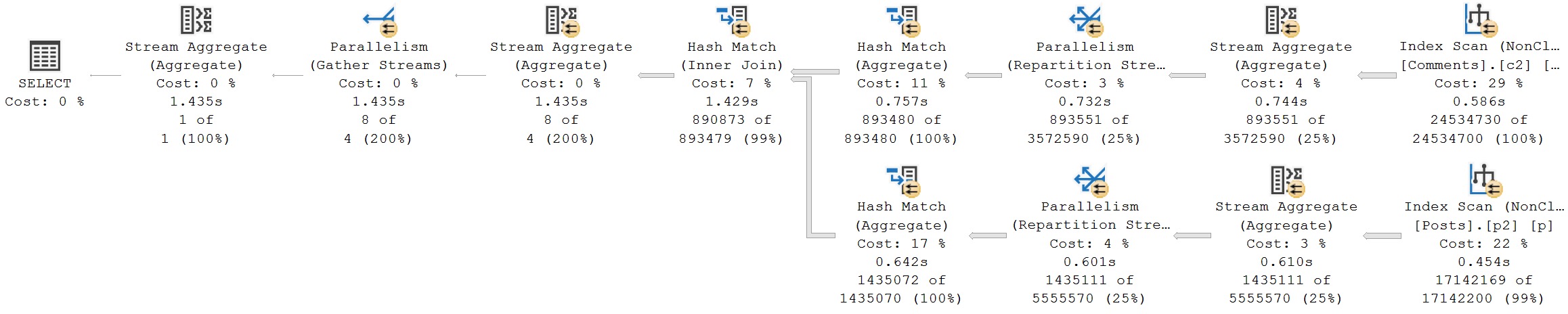

Rewrite #3: Manually Aggregate Both

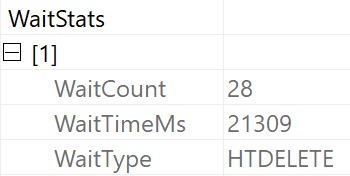

The fun thing about all of these plans is that, aside from the things I’ve been talking about, they all have the same problem as yesterday’s plans: Unless we tell SQL Server to aggregate things, it’s not trying to do that before the joins happen.

Again, the entire plan runs in Row Mode, using both new indexes. Most of the operators are ineligible for Batch Mode. The hash operations are eligible, but… they just don’t use it.

It’s not the end of the world for this query. It runs within a few milliseconds of yesterday’s with the old indexes. It’s just disappointing generally.

Rewrite #4: Manually Aggregate Both, Force Join Order

I’m going through the motions a touch with this one, because unlike yesterday’s plan, this one uses the forced join order naturally. It ends up in a similar situation as the above query plan though.

Again, both indexes are in use, but just not helping.
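
For anyone who hasn't used it, here is a rough sketch of what forcing join order looks like on a query of this shape. It is not the query from the plans above: the aliases and output column names are made up for illustration, and only the table and column names from the index definitions are taken from this post. The FORCE ORDER hint tells the optimizer to join tables in the order they are written in the FROM clause.

```sql
/* Illustrative only: aliases and output names are assumptions, not the original query */
SELECT
    ca.UserId,
    ca.CommentScore,
    pa.PostScore
FROM
(
    /* Written first, so joined first under FORCE ORDER */
    SELECT
        c.UserId,
        CommentScore = SUM(c.Score)
    FROM dbo.Comments AS c
    GROUP BY
        c.UserId
) AS ca
JOIN
(
    SELECT
        p.OwnerUserId,
        PostScore = SUM(p.Score)
    FROM dbo.Posts AS p
    GROUP BY
        p.OwnerUserId
) AS pa
  ON pa.OwnerUserId = ca.UserId
OPTION (FORCE ORDER); /* keep the join order exactly as written */
```

If the optimizer already picks the written order on its own, as it does here, the hint changes nothing.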

It Seems Strange

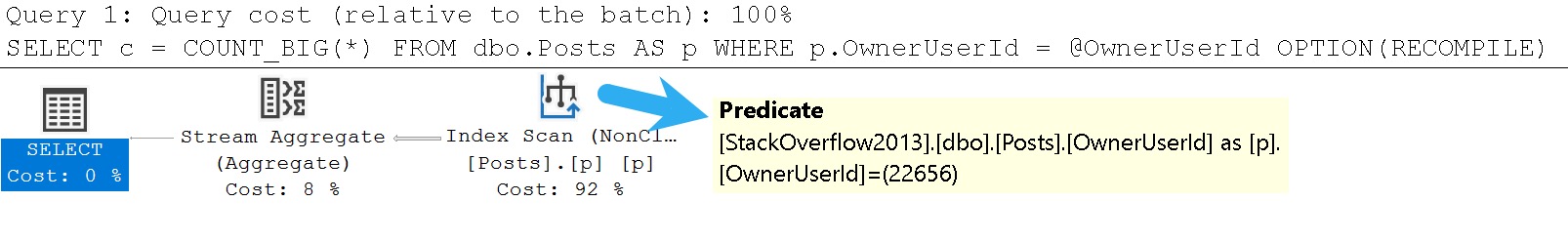

Why would SQL Server’s query optimizer decide that, with opportune indexes, Batch Mode just wouldn’t be useful?

Regardless of key column order, the same number of rows is still in play in all of my examples, with or without aggregations. In many cases the new indexes are scanned to acquire all of the rows, and even the seek operators need to acquire all of the rows!

There’s no WHERE clause to help things, and only a single one of the Row Mode queries uses a Bitmap operator that can filter some rows out of the joined table early.

Quite a strange brew of things to consider here. But the bottom line is, additional indexes are not always helpful for aggregation queries like this, and may result in really weird plan choices.

If you’re dealing with queries that aggregate a lot of data, and SQL Server isn’t choosing early partial or full aggregations before joining tables together, you’re probably going to have to roll up your sleeves and do it yourself.
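
As a minimal sketch of doing it yourself, the query below aggregates each table down to one row per user in a derived table before any join happens. The dbo.Users table, its Id and DisplayName columns, and the output aliases are assumptions for illustration. Only dbo.Posts (OwnerUserId, Score) and dbo.Comments (UserId, Score) come from the index definitions above.

```sql
/* Sketch only: dbo.Users, u.Id, u.DisplayName, and the output aliases are assumed */
SELECT
    u.Id,
    u.DisplayName,
    pa.PostScore,
    ca.CommentScore
FROM dbo.Users AS u
JOIN
(
    /* Collapse Posts to one row per user before joining */
    SELECT
        p.OwnerUserId,
        PostScore = SUM(p.Score)
    FROM dbo.Posts AS p
    GROUP BY
        p.OwnerUserId
) AS pa
  ON pa.OwnerUserId = u.Id
JOIN
(
    /* Collapse Comments to one row per user before joining */
    SELECT
        c.UserId,
        CommentScore = SUM(c.Score)
    FROM dbo.Comments AS c
    GROUP BY
        c.UserId
) AS ca
  ON ca.UserId = u.Id;
```

Each join now works on one row per user instead of every post and every comment. One thing to watch: a join-then-aggregate query multiplies post rows by comment rows for each user, so sums from a pre-aggregated form like this can differ from that version. Check which result you actually want.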

Thanks for reading!
